VRBench: A Benchmark for Multi-Step Reasoning in Long Narrative Videos

Abstract

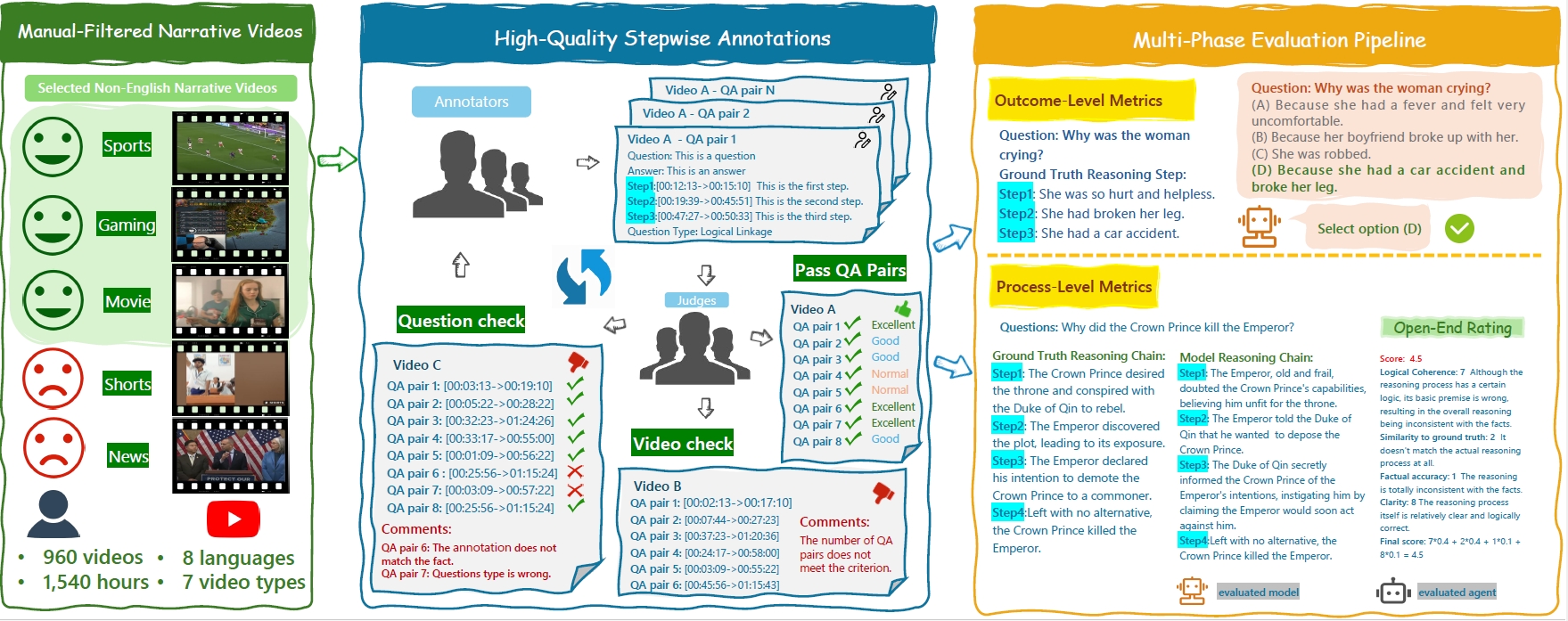

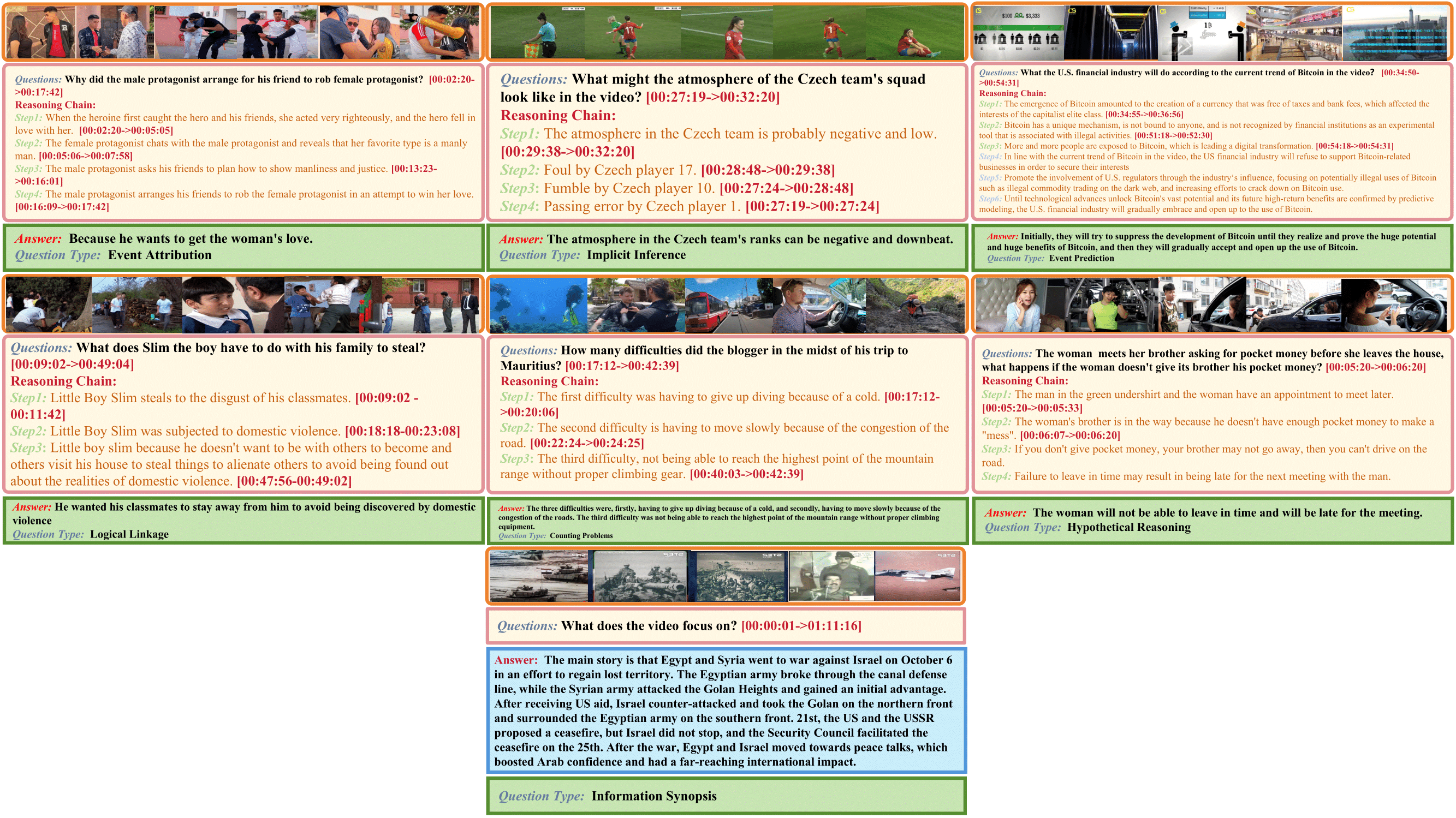

We present VRBench, the first long narrative video benchmark crafted to evaluate the multi-step reasoning capabilities of large models. It addresses a limitation of existing evaluations, which overlook temporal reasoning and procedural validity. VRBench comprises 960 long videos (average duration 1.6 hours), together with 8,243 human-labeled multi-step question-answering pairs and 25,106 timestamped reasoning steps. The videos are curated through a multi-stage filtering process, including expert inter-rater review, to prioritize plot coherence. We develop a human-AI collaborative framework that generates coherent reasoning chains spanning seven reasoning types (e.g., event attribution, implicit inference), each requiring multiple temporally grounded steps. VRBench uses a multi-phase evaluation pipeline that assesses models at both the outcome and process levels. In addition to multiple-choice questions (MCQs) that score final answers, we propose a process-level, LLM-guided scoring metric that evaluates the quality of the reasoning chain across multiple dimensions. Through extensive evaluations of 12 LLMs and 19 VLMs on VRBench, we provide a thorough analysis and insights that advance the study of multi-step reasoning.
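To make the data layout concrete, here is a minimal sketch of how one multi-step question-answering pair with timestamped reasoning steps could be represented in Python. The class and field names (`MultiStepQA`, `ReasoningStep`, `start_sec`, `end_sec`) are hypothetical and are not the released dataset's schema; only the seven reasoning types (taken from the taxonomy columns of the results table) and the totals (8,243 pairs, 25,106 steps) come from this page. The last lines derive the average number of reasoning steps per question from those totals.

```python
from dataclasses import dataclass, field
from enum import Enum


class ReasoningType(Enum):
    """The seven reasoning types, abbreviated as in the results table."""
    EVENT_ATTRIBUTION = "EA"
    COUNTING_PROBLEMS = "CP"
    HYPOTHETICAL_REASONING = "HR"
    IMPLICIT_INFERENCES = "II"
    INFORMATION_SYNOPSIS = "IS"
    EVENT_PREDICTION = "EP"
    LOGICAL_LINKAGE = "LL"


@dataclass
class ReasoningStep:
    """One temporally grounded reasoning step (hypothetical field names)."""
    text: str
    start_sec: float  # assumption: timestamps stored as seconds into the video
    end_sec: float


@dataclass
class MultiStepQA:
    """One multi-step question-answering pair (hypothetical schema)."""
    video_id: str
    question: str
    answer: str
    reasoning_type: ReasoningType
    steps: list[ReasoningStep] = field(default_factory=list)


# Totals stated in the abstract.
NUM_QA_PAIRS = 8_243
NUM_REASONING_STEPS = 25_106

avg_steps = NUM_REASONING_STEPS / NUM_QA_PAIRS
print(f"{avg_steps:.2f} reasoning steps per question on average")  # prints 3.05
```

On average each question therefore carries roughly three timestamped steps, which is consistent with the benchmark's emphasis on multi-step rather than single-hop reasoning.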

VRBench Evaluation Results

MCQ-O and OE-P give results by metric (outcome and process); the seven columns from EA to LL give results by reasoning type.

| Model | Overall | MCQ-O (Outcome) | OE-P (Process) | EA (Event Attribution) | CP (Counting Problems) | HR (Hypothetical Reasoning) | II (Implicit Inferences) | IS (Information Synopsis) | EP (Event Prediction) | LL (Logical Linkage) |
|---|---|---|---|---|---|---|---|---|---|---|
| **LLMs** | | | | | | | | | | |
| *Proprietary Models* | | | | | | | | | | |
| GPT-4o | 55.49 | 63.87 | 47.11 | 54.41 | 32.63 | 69.03 | 61.47 | 71.83 | 66.37 | 66.19 |
| Claude-3.7-Sonnet | 58.07 | 62.91 | 53.23 | 55.87 | 35.11 | 69.83 | 63.29 | 72.61 | 67.91 | 67.89 |
| o1-preview | 60.14 | 68.47 | 51.81 | 56.97 | 35.87 | 70.51 | 64.81 | 73.13 | 68.27 | 69.41 |
| Gemini-2.0-Flash-Thinking | 60.97 | 67.53 | 54.41 | 58.61 | 38.11 | 72.09 | 67.57 | 74.19 | 70.43 | 71.13 |
| *Open-Source Models* | | | | | | | | | | |
| QwQ-32B-preview | 35.90 | 27.51 | 44.29 | 34.31 | 34.41 | 46.29 | 39.67 | 27.97 | 42.39 | 40.61 |
| InternLM3-8B-Instruct | 47.81 | 50.31 | 45.31 | 45.47 | 34.87 | 60.33 | 51.77 | 55.47 | 56.97 | 56.81 |
| Qwen2.5-7B-Instruct | 48.29 | 52.61 | 43.97 | 46.93 | 35.17 | 61.83 | 51.57 | 54.87 | 56.63 | 57.03 |
| Llama3.3-70B-Instruct | 49.84 | 52.59 | 47.09 | 47.63 | 38.07 | 63.87 | 54.03 | 54.83 | 59.47 | 56.97 |
| QwQ-32B | 52.52 | 56.01 | 49.03 | 49.53 | 36.03 | 62.01 | 54.03 | 52.03 | 58.01 | 59.03 |
| Qwen2.5-72B-Instruct | 53.51 | 60.49 | 46.53 | 51.03 | 36.01 | 67.03 | 58.53 | 67.83 | 61.93 | 63.03 |
| DeepSeek-V3 | 56.06 | 64.79 | 47.33 | 54.03 | 36.57 | 69.97 | 61.83 | 69.53 | 65.53 | 65.43 |
| DeepSeek-R1 | 57.13 | 64.19 | 50.07 | 56.13 | 38.91 | 72.81 | 64.13 | 68.01 | 68.53 | 67.93 |
| **VLMs** | | | | | | | | | | |
| *Proprietary Models* | | | | | | | | | | |
| Claude-3.7-Sonnet | 68.15 | 80.09 | 56.21 | 63.11 | 32.97 | 72.63 | 71.13 | 75.37 | 71.33 | 70.27 |
| GPT-4o | 68.68 | 81.23 | 56.13 | 66.61 | 36.51 | 76.67 | 70.47 | 78.03 | 72.03 | 73.17 |
| Gemini-2.0-Pro | 74.61 | 83.29 | 65.93 | 71.09 | 65.21 | 81.01 | 75.73 | 87.13 | 77.93 | 75.89 |
| *Open-Source Models* | | | | | | | | | | |
| DeepSeek-VL2 | 31.50 | 33.27 | 29.73 | 25.93 | 22.41 | 35.73 | 31.73 | 30.01 | 33.29 | 29.57 |
| H2OVL Mississippi-2B | 47.15 | 52.33 | 41.97 | 35.17 | 32.37 | 51.71 | 41.41 | 60.03 | 49.97 | 41.91 |
| Phi-3.5-Vision | 48.52 | 58.03 | 39.01 | 31.53 | 28.03 | 45.03 | 37.03 | 68.03 | 44.03 | 36.03 |
| LongVA-7B | 50.14 | 67.81 | 32.47 | 27.61 | 25.07 | 38.69 | 33.77 | 76.91 | 38.27 | 32.07 |
| InternVL2.5-8B | 50.41 | 69.31 | 31.51 | 26.63 | 26.31 | 37.99 | 31.21 | 85.97 | 37.37 | 30.69 |
| MiMo-VL-7B-RL | 50.48 | 63.39 | 37.57 | 47.31 | 34.59 | 63.83 | 53.23 | 74.61 | 60.33 | 56.97 |
| VideoChat-Flash-7B | 50.82 | 72.01 | 29.63 | 24.69 | 21.41 | 39.37 | 29.17 | 79.37 | 34.87 | 28.07 |
| InternVideo2.5 | 51.94 | 75.63 | 28.25 | 23.81 | 24.09 | 34.51 | 27.07 | 84.57 | 33.61 | 26.81 |
| LongVA-7B-DPO | 52.36 | 67.91 | 36.81 | 30.73 | 27.17 | 45.09 | 38.23 | 79.39 | 43.67 | 37.03 |
| Qwen2-VL-7B | 54.08 | 72.01 | 36.15 | 30.49 | 26.61 | 46.71 | 33.69 | 85.27 | 43.31 | 35.29 |
| Aria | 54.55 | 72.97 | 36.13 | 30.19 | 29.23 | 44.23 | 34.63 | 86.23 | 44.99 | 34.57 |
| Qwen2.5-VL-7B | 56.52 | 69.61 | 43.43 | 37.07 | 33.17 | 54.27 | 42.27 | 83.91 | 50.17 | 42.87 |
| Keye-VL-8B-Preview | 60.44 | 64.41 | 56.47 | 62.53 | 40.37 | 73.61 | 67.99 | 70.13 | 71.83 | 71.03 |
| Qwen2.5-VL-72B | 61.71 | 66.85 | 56.57 | 51.87 | 46.13 | 67.13 | 54.13 | 90.04 | 63.67 | 60.77 |
| Kimi-VL-A3B-Thinking-2506 | 61.82 | 61.67 | 61.97 | 64.53 | 47.91 | 71.37 | 69.57 | 74.47 | 71.23 | 71.47 |
| InternVL2.5-78B | 62.31 | 76.61 | 48.01 | 43.77 | 38.67 | 58.21 | 46.13 | 87.53 | 54.43 | 47.57 |
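This page does not state how the Overall column is aggregated. In every row of the results table, however, Overall equals the unweighted mean of the outcome score (MCQ-O) and the process score (OE-P), to two decimal places. The Python sketch below checks that observation on a few rows copied from the table; the `overall` helper is hypothetical and encodes an inference from the numbers, not the authors' stated definition.

```python
# (MCQ-O, OE-P, reported Overall) for a few rows of the results table.
ROWS = {
    "GPT-4o (LLM)": (63.87, 47.11, 55.49),
    "DeepSeek-R1": (64.19, 50.07, 57.13),
    "Gemini-2.0-Pro": (83.29, 65.93, 74.61),
    "InternVL2.5-78B": (76.61, 48.01, 62.31),
}


def overall(mcq_outcome: float, oe_process: float) -> float:
    """Hypothetical aggregation: unweighted mean of outcome and process scores."""
    return round((mcq_outcome + oe_process) / 2, 2)


for name, (mcq, oe, reported) in ROWS.items():
    computed = overall(mcq, oe)
    # Tolerance absorbs floating-point error; it is far below the 0.01 display precision.
    assert abs(computed - reported) < 0.006, name
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}")
```

Read this way, the table weights outcome accuracy and reasoning-chain quality equally, which is why models with high MCQ-O but low OE-P (for example, InternVideo2.5) rank lower on Overall than their MCQ-O alone would suggest.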

BibTeX

If you find our work useful, please consider citing our paper:

@article{yu2025vrbench,
  title={VRBench: A Benchmark for Multi-Step Reasoning in Long Narrative Videos},
  author={Yu, Jiashuo and Wu, Yue and Chu, Meng and Ren, Zhifei and Huang, Zizheng and Chu, Pei and Zhang, Ruijie and He, Yinan and Li, Qirui and Li, Songze and others},
  journal={arXiv preprint arXiv:2506.10857},
  year={2025}
}